Javid S. Aliyev

View the Project on GitHub JohnnyAliyev/JohnnyAliyev-github.io

Machine Learning / AI Engineer

About Me and My Work

Welcome to my portfolio! I specialize in leveraging machine learning to solve complex problems and deliver innovative solutions. My projects showcase my ability to adapt existing models and frameworks, enhancing them with my unique ideas and insights. While some of the work involves building upon established projects, my contributions include integrating novel approaches, optimizing performance, and applying creative problem-solving skills. Each project reflects my commitment to advancing technology and delivering impactful results.

Projects

Visualizing Transparency: Dimensional Reduction of Glass Composition Data Using t-Distributed Stochastic Neighbor Embedding Techniques (T-SNE)

This project focuses on reducing dimensions and visualizing the Glass Identification Data Set using t-Distributed Stochastic Neighbor Embedding (t-SNE). By transforming the dataset, which consists of 214 instances and 9 chemical attributes, into an intuitive two-dimensional representation, we aim to facilitate effective visualization and exploration of glass types.

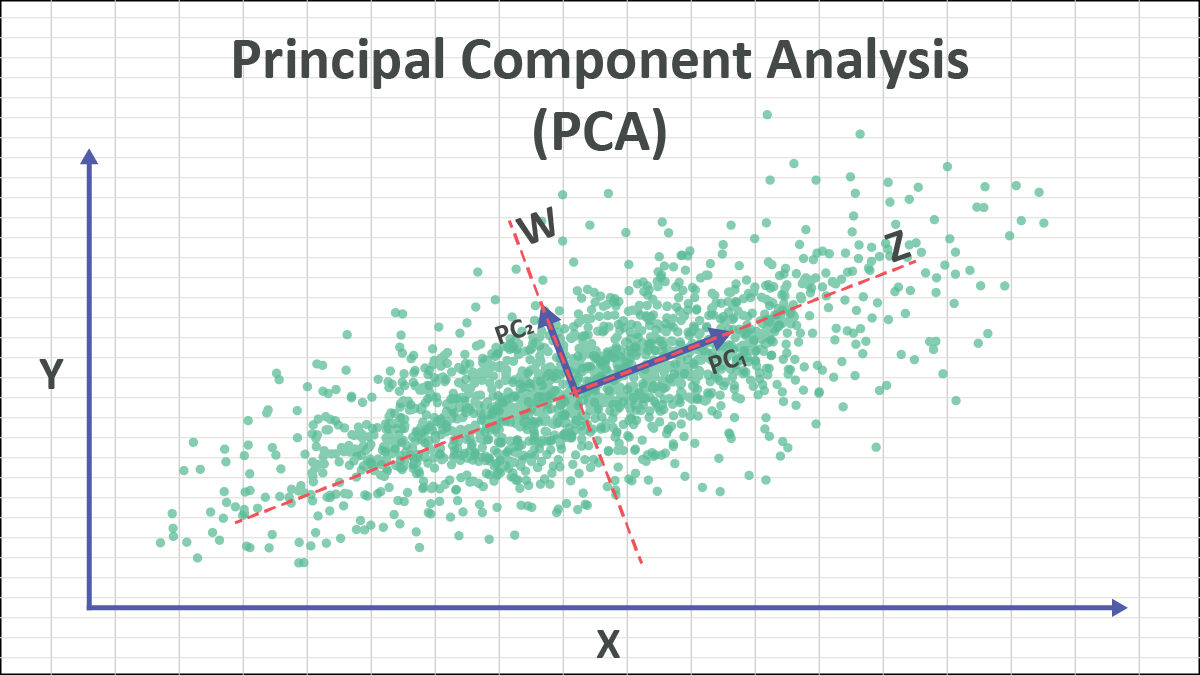

PCA Insights: Simplifying Glass Dataset

The aim of this project is to use Principal Component Analysis (PCA) exclusively for dimensionality reduction on the Glass Identification Data Set from UCI. This dataset consists of 214 instances and 9 attributes related to glass composition. By applying PCA, the project seeks to simplify the dataset while retaining its essential characteristics, facilitating further analysis of glass classification.

Outlier Detection in Substance Use Patterns Using Isolation Forest Algorithm

This project aims to find outliers in substance use patterns through the Isolation Forest algorithm applied to a dataset of 1885 respondents.

Advanced Clustering Analysis of Wine Chemistry Using Hierarchical Clustering and DBSCAN Methods

This project leverages an adapted UCI Wine Data Set for unsupervised learning. The dataset, which contains 13 chemical attributes of wines from the same region, is used to explore hidden patterns through Hierarchical and DBSCAN clustering algorithms.

Finding Optimal Client Clusters Using the K-Means Algorithm

This project involves utilizing the K-Means algorithm to segment clients of a wholesale distributor of gourmet food products. By analyzing yearly client data, the goal is to identify distinct customer groups to help the company tailor its services and strategies more effectively.

Segmenting Shoppers with K-Means: Uncovering Customer Clusters in FMCG Purchases

This project uses K-means clustering to identify distinct customer segments from data collected via loyalty cards at an FMCG store. By analyzing attributes such as age, income, and occupation, we aim to reveal meaningful patterns to enhance marketing strategies and store offerings.

Estimating University Admission Probability by employing Multiple Linear Regression

This project develops a regression model to predict the chances of getting into a graduate program. We use a dataset with features like GRE and TOEFL scores, university rating, and GPA. The goal is to estimate the probability of admission based on these factors.

Predicting House Prices by employing ANN

This project focuses on predicting house prices in King County, USA, using a dataset from Kaggle covering sales from May 2014 to May 2015. The dataset includes features like the number of bedrooms, bathrooms, square footage, and more. The goal is to build a model that estimates house prices and reveals key factors influencing the housing market during this period.

Predicting Stock Price based on Interest Rates, Employment by employing Multiple Linear Regression

This project is a basic introduction to multiple linear regression. We use a straightforward dataset with three columns: Interest Rates, Employment, and S&P 500 Price. The objective is to predict the S&P 500 Price based on Interest Rates and Employment. For simplicity, we also included an evaluation model to check the accuracy of our predictions.

Predicting Malignant or Benign Tumors Using Support Vector Machines (SVM)

This project uses Support Vector Machines (SVM) to classify tumors as benign or malignant based on 30 features.

Advertisement Click Forecasting Using Logistic Regression

This project aims to predict which users are likely to click on advertisements using logistic regression. We analyze customer data to build a model that helps optimize ad targeting and marketing strategies.

Finding the Nearest Class Using KNN

This project uses the k-Nearest Neighbors (KNN) algorithm to predict T-shirt size based on height (in cm) and weight (in kg). The model finds the nearest neighbors to classify the T-shirt size accurately.

Robust Plant Classification Utilizing the K-Nearest Neighbors (KNN) Algorithm

This project aims to predict the species of iris plants using their features. The dataset includes 150 samples across three species: Iris Setosa, Iris Versicolour, and Iris Virginica. Each sample is described by sepal length, sepal width, petal length, and petal width.

Utilizing Decision Tree Analysis for Classifying Amazon Alexa Review Ratings

This project utilizes Decision Tree Analysis to classify Amazon Alexa review ratings. The dataset includes nearly 3,000 reviews with star ratings, review dates, product variants, and feedback.

Applying Ridge and Lasso Regression for Regularized Linear Models

In this project, we use Ridge and Lasso Regression to improve linear models. Ridge Regression helps manage complexity by shrinking coefficients, while Lasso Regression simplifies models by eliminating less important features. These techniques enhance both the accuracy and interpretability of our predictions.

Predicting Purchases with SVM Classifier

This project uses a Support Vector Machine (SVM) classifier to predict whether a customer will make a purchase based on their age and estimated salary. By analyzing these two features, the model classifies potential buyers, helping businesses target relevant customers more effectively. The dataset includes binary labels indicating whether each individual made a purchase or not.

Logistic Regression Model for Predicting the Survival Chances of Titanic Passengers

This project applies Logistic Regression to predict the survival of passengers on the Titanic. By analyzing various features such as age, gender, passenger class, and fare, the model classifies whether a passenger is likely to survive the disaster. Logistic Regression is used due to its suitability for binary classification problems, making it ideal for this task of predicting survival outcomes.

Developing a Logistic Regression Model for Predicting Breast Tumor Diagnosis Based on Tumor Characteristics

This project employs Logistic Regression to predict the diagnosis of breast tumors based on tumor characteristics such as size, shape, and texture. The model classifies tumors into benign or malignant categories, aiding in early detection and diagnosis. Logistic Regression is chosen for its effectiveness in binary classification tasks, making it suitable for distinguishing between the two tumor types.

Classification Models for Crop Recommendation Based on Soil and Climate Data

This project utilizes various classification models to recommend suitable crops based on soil conditions and climate data. By analyzing factors such as soil type, moisture levels, and temperature, the models predict which crops will thrive in specific environments. These recommendations aim to optimize agricultural yield and resource use, assisting farmers in making informed decisions for crop selection.

Predicting Revenue based on Temperature by employing Simple Linear Regression

We used simple linear regression to predict revenue based on temperature. To check the model's accuracy, we created a Q-Q plot to test the normality of residuals, a leverage plot to find influential points, and a residual plot to check for consistent variance.

Predicting Salary based on Years of Experience by employing Polynomial Regression

We used polynomial regression to predict salary based on years of experience. This approach allows for modeling more complex relationships between experience and salary. Diagnostic checks included plotting residuals to assess model fit and ensure reliable predictions.

Car Price Estimation Using Artificial Neural Networks

Estimating car prices accurately is difficult due to factors like model, mileage, and year. This project uses Artificial Neural Networks (ANNs) to develop a predictive model that leverages historical data to deliver more precise price predictions, providing valuable insights for buyers and sellers in the automotive market.

CNN-Based Image Classification Using the CIFAR Dataset

This project uses Convolutional Neural Networks (CNNs) to classify images from the CIFAR dataset. By leveraging CNNs' ability to capture intricate patterns in images, the goal is to accurately categorize images into predefined classes, enhancing image classification performance.

CrimeTrend Forecasting with Facebook Prophet: Analyzing Chicago’s Criminal Data (2001-Present)

This project uses Facebook Prophet to forecast crime trends in Chicago based on data from 2001 to the present. The goal is to identify patterns and predict future crime rates for better decision-making.

Leveraging LeNet CNN for Effective Traffic Sign Classification and Recognition

This project utilizes the LeNet CNN architecture to classify and recognize traffic signs, aiming to accurately identify various traffic sign types using deep learning techniques.

Email Spam Detection and Classification Using Naive Bayes Algorithm: Enhancing Email Filtering Efficiency

This project applies the Naive Bayes algorithm to detect and classify spam emails, enhancing email filtering by distinguishing between spam and legitimate messages.

Utilizing the Naive Bayes Method to Predict Credit Card Fraud

This project uses the Naive Bayes method to predict credit card fraud. The dataset includes 284,807 transactions from European cardholders, with 492 identified as fraudulent. Features are numerical and PCA-transformed, except for 'Time' and 'Amount,' which provide context for each transaction.

Harnessing Naive Bayes and Natural Language Processing for Yelp Comment Analysis

This project applies Naive Bayes and Natural Language Processing to analyze Yelp comments, aiming to classify sentiment and extract meaningful insights from reviews.

Pearson Correlation-Based Recommendation System for Accurate Suggestions

This notebook implements a recommendation system based on Pearson correlation. It calculates similarities between items to provide personalized recommendations tailored to user preferences.

Leveraging XGBoost for Enhanced Precision in Supermarket Sales Forecasting

This project employs XGBoost to predict supermarket sales, aiming for accurate forecasts by analyzing historical data and utilizing machine learning techniques.

Customer Grouping with K-Means

This project employs K-Means clustering to categorize customers by income and spending scores, aiming to identify distinct groups for more targeted marketing.

Resume

For a detailed overview of my professional experience, skills, and education, please download my resume:

Feel free to review it for more information about my background and qualifications.